By: Geoff LaPorte, Professional Services Engineer

For technologists working with AI, data quality is of the utmost importance. The accuracy and reliability of AI models depend on the data used to train them. To ensure the best possible data, technologists rely on large crowds of contributors to help with data collection and annotation tasks. Beyond collecting and annotating data, crowd contributors take part in the human-in-the-loop process of machine learning, which helps ensure that diversity of thought, culture, and knowledge is incorporated into the AI models being built.

As we know, your model is only as good as your training data.

To ensure that the data output from these contributors is of the highest possible standard, it’s vital to follow tried-and-true best practices in your job design. One of the primary ways to do this is by optimizing your job instructions.

In this post, you’ll learn best practices for creating job instructions for data collection and data annotation jobs.

Why well-defined job instructions are an important step to increasing data quality for AI

- First Impressions Count: Whether for a data collection or annotation job, instructions are the first thing contributors see when they open your job. They are the first impression you make on the person you’re trusting to create quality data for your AI model. For this reason alone, it’s worth paying extra attention to how you design your instructions page. It should be well-written, and it should also be visually appealing and ‘easy on the eyes’, so to speak.

- Strive for Clarity: Poorly written job instructions suggest a lack of attention to detail and quality from the job owner, leading to confusion, frustration, and errors from the contributors. Clearly define expectations and give a range of examples for contributors to ensure high-quality output data for your AI model.

- Be Consistent: Juggling multiple jobs across the platform is simpler when job instructions and examples are consistent. Contributors gain a deeper understanding of similar tasks and carry less cognitive load, which ultimately improves efficiency and effectiveness and helps unlock throughput in your job.

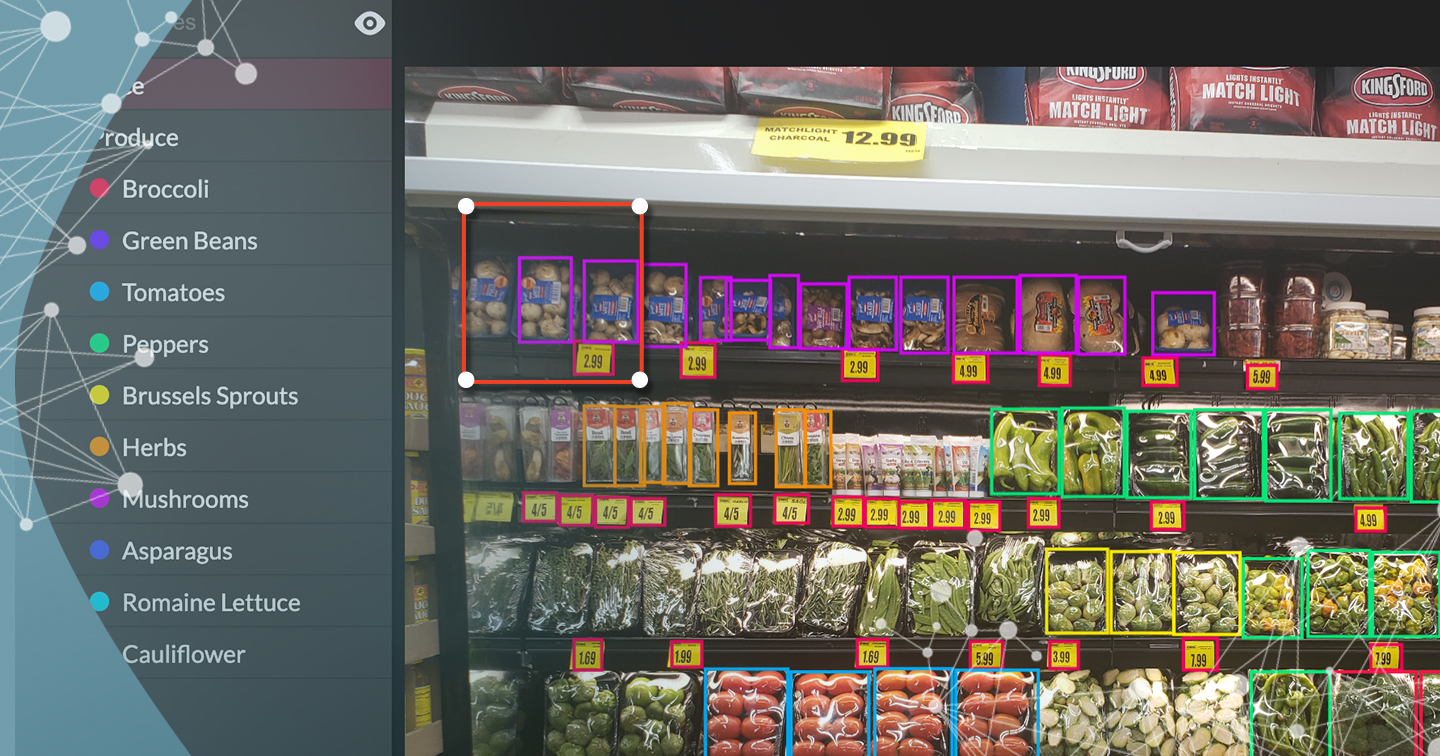

- Consider the Contributor: High-quality data depends on engaged contributors. Go beyond clear instructions and use examples and visuals in your job design. Don’t overlook important factors such as geographic location, language, cultural biases, and current events that may affect your contributors.

Examples of good and bad instructions

Best Practices to Create Job Instructions in Appen’s Data Annotation Platform (ADAP)

In Appen’s Data Annotation Platform (ADAP), job instructions are composed of four areas: Overview, Steps, Rules/Tips, and Examples. Use these tips to provide a strong framework for effective data annotation and data collection job success.

Tips to write a good Overview:

- Write a one- to two-sentence job overview that effectively defines the task. This should provide a high-level overview of the job’s end goal.

- Consider the demographics, languages, and experience of the contributors. Successful ADAP jobs use simplified wording, which scales across contributor groups and helps contributors understand the requirements more thoroughly.

Tips to define Steps:

- Break the task into logical steps. Each step should describe the actions that the contributor should take.

- Do not provide definitions or rules in the step.

- The steps should follow the control flow of the job design. In other words, they should follow the sequence in which an answer to one question triggers a subsequent or relevant question in the job design.

Tips to write Rules and Tips:

- Use this section to clarify areas of potential confusion.

- Make sure you’re using all relevant definitions, context, and resources for this step in the instructions.

- The goal is to explain how the crowd is expected to annotate in the task.

Tips to provide Examples:

- Address common cases and edge cases

- Provide 3 or more examples

- Provide examples from the source data to help the contributors fully understand the task
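The four areas and the tips above can be treated as a simple checklist to run over a draft before launching a job. The following is a minimal sketch in Python, not part of ADAP: the `JobInstructions` class, the `check_instructions` function, and the sentence-counting heuristic are all hypothetical names and assumptions introduced here for illustration.

```python
import re
from dataclasses import dataclass, field
from typing import List


@dataclass
class JobInstructions:
    """Hypothetical container for the four instruction areas (not an ADAP API)."""
    overview: str
    steps: List[str] = field(default_factory=list)
    rules_and_tips: List[str] = field(default_factory=list)
    examples: List[str] = field(default_factory=list)


def count_sentences(text: str) -> int:
    """Rough sentence count: split on ., ! or ? followed by whitespace or end of text."""
    parts = re.split(r"[.!?]+(?:\s+|$)", text.strip())
    return len([p for p in parts if p.strip()])


def check_instructions(draft: JobInstructions) -> List[str]:
    """Return a list of problems found in the draft; an empty list means it passes."""
    problems = []

    # Overview: one to two sentences that define the task.
    sentences = count_sentences(draft.overview)
    if sentences == 0:
        problems.append("Overview is missing.")
    elif sentences > 2:
        problems.append(f"Overview has {sentences} sentences; aim for one or two.")

    # Steps: the task should be broken into logical steps.
    if not draft.steps:
        problems.append("No steps defined.")

    # Rules/Tips: used to clarify areas of potential confusion.
    if not draft.rules_and_tips:
        problems.append("No rules or tips defined.")

    # Examples: provide 3 or more.
    if len(draft.examples) < 3:
        problems.append(f"Only {len(draft.examples)} example(s); provide 3 or more.")

    return problems


if __name__ == "__main__":
    draft = JobInstructions(
        overview="Label each image as containing a cat or a dog.",
        steps=["Look at the image.", "Select the matching label."],
        rules_and_tips=["If both animals appear, choose the larger one."],
        examples=["Clear photo of a cat", "Clear photo of a dog"],
    )
    for problem in check_instructions(draft):
        print(problem)
```

A check like this only catches structural gaps. Whether the wording is clear and the examples cover the right edge cases still needs a human read.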

Other Tips:

- Highlight keywords and definitions. You can also bold and italicize text.

- For new jobs, find a relevant template and use the boilerplate instructions as a starting point

- Iterate on the instructions

- Add relevant examples and edge cases that are commonly missed

- Update definitions or add keywords that will help reduce confusion

To achieve high data quality within your data collection job, it’s important that job instructions are detailed, well-written, concise, and use simple vocabulary with references and visuals.

We hope these best practices help you to achieve higher data quality for your AI model.