In their presentation Creating Autonomy for Social Robots, Dylan Glas, Senior Robotics Software Architect at Futurewei Technologies, and Figure Eight Machine Learning Scientist Phoebe Liu addressed the challenges faced in developing robots that have human-level depth and nuance in their social interactions.

Goals for creating more social robots

The researchers focused on a few key goals for developing more socially intelligent robots:

- Interact with people as peers rather than as devices

- Communicate using both speech and gesture

- Provide useful services that would normally require a human being

How do we do this?

Glas and Liu set out to identify ways to train machine learning models on real-world scenarios, which are full of ambiguity and variety. By focusing their research in this way, they hoped to:

1. Learn about people: learn how people behave in order to anticipate and respond to them.
2. Learn from people: learn social behaviors from people's explicit and implicit knowledge.
3. Learn with people: improve and personalize interaction logic through real interactions.

Let’s go to the mall

To learn about people, the team drew on two years of interactions at a shopping mall in Japan. The goal of this experiment was to train a machine learning model that would let a robot approach people and offer directions or recommend shops to visit. Glas and Liu identified several potential obstacles:

- Robots must move slowly for safety, so they may not be able to approach people quickly enough to catch their attention.

- Sensing is short-range, so a robot must be close to a shopper to gather the data it needs to make appropriate decisions.

- Many people are simply too busy or uninterested to interact with a robot.
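
The first two obstacles can be framed as a simple geometric check: given where a shopper is and how fast they are walking, can a slow robot with short-range sensing reach them at all? The sketch below is a hypothetical illustration, not the researchers' method. It assumes a constant-velocity pedestrian, positions in meters, and made-up values for the robot's top speed and sensing range; the function name `earliest_meeting_time` is invented.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float]  # (x, y) position or velocity, assumed in meters

def earliest_meeting_time(robot: Vec, person: Vec, person_vel: Vec,
                          robot_speed: float, sensing_range: float,
                          horizon: float = 10.0, dt: float = 0.1) -> Optional[float]:
    """Return the earliest time (s) at which the robot could reach the person's
    predicted position, or None if the person is out of sensing range or
    cannot be intercepted within the horizon."""
    if math.dist(robot, person) > sensing_range:
        return None  # too far away to observe reliably
    steps = int(round(horizon / dt))
    for i in range(1, steps + 1):
        t = i * dt
        # Constant-velocity prediction of where the person will be at time t.
        px = person[0] + person_vel[0] * t
        py = person[1] + person_vel[1] * t
        # Reachable if the robot can cover that distance at its top speed.
        if math.dist(robot, (px, py)) <= robot_speed * t:
            return t
    return None

robot = (0.0, 0.0)
# Shopper 3 m away, walking toward the robot at 1 m/s; robot limited to 0.5 m/s.
print(earliest_meeting_time(robot, (3.0, 0.0), (-1.0, 0.0), robot_speed=0.5, sensing_range=5.0))  # about 2.0
# Same shopper walking away: the slow robot never catches up.
print(earliest_meeting_time(robot, (3.0, 0.0), (1.0, 0.0), robot_speed=0.5, sensing_range=5.0))  # None
```

Checking the shopper's predicted positions, rather than only their current one, reflects the first obstacle: a slow robot can realistically meet only people who are walking toward it or lingering nearby.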

In addition to trajectory data, Glas and Liu suggested that a flowchart-based visual programming language, with blocks representing back-end functions such as talking or shaking hands, could be used to define the robot's interactions once a person had been approached and engaged. With this approach, they hypothesized, content authors could easily combine speech, gesture, and emotion to manage dialogue and build engaging interactions.
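
A flowchart of this kind can be represented as a small graph of blocks, each wrapping a back-end action and pointing to the next block depending on the person's response. The sketch below is a hypothetical illustration: `talk`, `shake_hands`, `Block`, and `run_flow` are invented names, and the actions only append to a log instead of driving a real robot.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical back-end actions; a real robot would call its speech and motion APIs.
def talk(text: str, log: List[str]) -> None:
    log.append(f"say: {text}")

def shake_hands(log: List[str]) -> None:
    log.append("gesture: shake hands")

@dataclass
class Block:
    """One node in the flowchart: an action plus optional branching."""
    action: Callable[[List[str]], None]
    # Maps a detected response (e.g. "yes"/"no") to the id of the next block.
    next_by_response: Dict[str, str] = field(default_factory=dict)
    default_next: Optional[str] = None  # used when there is no branch or no match

def run_flow(blocks: Dict[str, Block], start: str, responses: List[str]) -> List[str]:
    """Execute blocks from `start`, consuming one response at each branching block."""
    log: List[str] = []
    replies = iter(responses)
    current: Optional[str] = start
    while current is not None:
        block = blocks[current]
        block.action(log)
        if block.next_by_response:
            reply = next(replies, "")  # empty string if the person says nothing
            current = block.next_by_response.get(reply, block.default_next)
        else:
            current = block.default_next
    return log

flow = {
    "greet": Block(lambda log: talk("Hello! Can I help you find a shop?", log),
                   next_by_response={"yes": "recommend", "no": "bye"},
                   default_next="bye"),
    "recommend": Block(lambda log: talk("The camera shop is on the second floor.", log),
                       default_next="handshake"),
    "handshake": Block(shake_hands, default_next="bye"),
    "bye": Block(lambda log: talk("Have a nice day!", log)),
}

for line in run_flow(flow, "greet", ["yes"]):
    print(line)
```

Keeping each block's action separate from its branching lets an author rearrange the dialogue without touching the back-end functions, which is the kind of easy composition the researchers hypothesized about.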

Crowdsourcing human behavior for data-driven human-robot interactions (HRI)

During their presentation, Liu and Glas also discussed the benefits and challenges of crowdsourcing the work of training better social robots. Annotating social behavior can be difficult because it is often subjective and fuzzy; we often cannot clearly articulate why we adhere to certain social rules. It is also challenging to communicate instructions to workers so that they annotate the data accurately.

The camera shop experiment

To explore how they might address these crowdsourcing challenges, Glas and Liu set up a simulation in a camera shop where participants role-played as either a customer or a shopkeeper and spoke and acted naturally, without any script. Key aspects of the experiment included:

- Multimodal interaction with speech, locomotion, and proxemic formations

- A human position-tracking system based on RGB-D depth sensors, which reports each person's X, Y position every second

- Role-players also carried an Android phone for speech recognition, to compare performance
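
Because the tracker reports each person's X, Y position once per second, a short sequence of samples can be turned into a walking speed and a rough prediction of where the person is heading. The sketch below is a hypothetical illustration with invented function names, assuming positions in meters and a fixed 1-second interval; it averages over the whole track and does no noise filtering, which real tracking data would likely need.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y), assumed in meters

def estimate_velocity(track: List[Point], interval_s: float = 1.0) -> Point:
    """Average velocity (m/s) over (x, y) samples taken every `interval_s` seconds."""
    if len(track) < 2:
        return (0.0, 0.0)  # not enough samples to measure movement
    (x0, y0), (x1, y1) = track[0], track[-1]
    elapsed = interval_s * (len(track) - 1)
    return ((x1 - x0) / elapsed, (y1 - y0) / elapsed)

def walking_speed(track: List[Point], interval_s: float = 1.0) -> float:
    """Magnitude of the average velocity."""
    vx, vy = estimate_velocity(track, interval_s)
    return math.hypot(vx, vy)

def predict_position(track: List[Point], horizon_s: float, interval_s: float = 1.0) -> Point:
    """Extrapolate the last sample assuming constant velocity (needs at least one sample)."""
    vx, vy = estimate_velocity(track, interval_s)
    x, y = track[-1]
    return (x + vx * horizon_s, y + vy * horizon_s)

# Hypothetical 1 Hz samples of a shopper walking along the x axis.
samples = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
print(walking_speed(samples))          # 1.0
print(predict_position(samples, 2.0))  # (5.0, 0.0)
```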

One thing the experiment showed is that natural conversation is very noisy: even a state-of-the-art speech recognition system produced a correct result, or one with only very minor errors, in just 53% of the interactions. A large share of the speech data contained minor or major errors, or was totally unrecognizable. Another key finding was that behavior varies naturally from person to person, even when people are performing the same action. For example, people use different phrases to say semantically similar things. Despite this variation, people's behavior follows common, repeatable patterns at its core, which means it is possible to abstract the data into representations of typical behavior elements.
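
One simple way to picture abstracting varied phrasings into typical behavior elements is to group utterances that share most of their words. The sketch below swaps in a plain token-overlap (Jaccard) measure with greedy clustering; the summary above does not say which method Glas and Liu used, and the 0.3 threshold and all function names are arbitrary assumptions.

```python
import re
from typing import List, Set

def tokens(text: str) -> Set[str]:
    """Lower-cased word tokens (apostrophes kept, other punctuation dropped)."""
    return set(re.findall(r"[a-z']+", text.lower()))

def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def cluster_utterances(utterances: List[str], threshold: float = 0.3) -> List[List[str]]:
    """Greedy single pass: each utterance joins the first cluster whose
    representative (its first member) is similar enough, else starts a new cluster."""
    clusters: List[List[str]] = []
    reps: List[Set[str]] = []
    for utterance in utterances:
        toks = tokens(utterance)
        for i, rep in enumerate(reps):
            if jaccard(toks, rep) >= threshold:
                clusters[i].append(utterance)
                break
        else:  # no similar cluster found
            clusters.append([utterance])
            reps.append(toks)
    return clusters

# Hypothetical customer utterances.
utterances = [
    "How much is this camera?",
    "How much does this camera cost?",
    "Does it have optical zoom?",
    "Does it have an optical zoom lens?",
]
for group in cluster_utterances(utterances):
    print(group)
```

A purely lexical measure like this misses paraphrases that share few words: "What's the price of this camera?" scores below the threshold against "How much is this camera?", which is one reason semantic representations are often used for this kind of grouping instead.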

By introducing subtle personality differences into the role-playing experiment, Glas and Liu were able to gather more nuanced data. For example, the shopkeeper was simulated using training examples from two people: one with a quiet, shy personality and one who was quite outgoing. These role-players were asked to act as a passive and a proactive shopkeeper, respectively, to see whether their interaction styles could be captured from the training data.
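
The passive/proactive setup suggests a simple way to test whether an interaction style is recoverable from data: learn response statistics separately for each shopkeeper. The sketch below uses a conditional frequency table as a stand-in for whatever model the researchers actually trained; the function names are hypothetical, and the example data is invented for illustration, not taken from the experiment.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# (shopkeeper style, customer behavior, shopkeeper response)
Example = Tuple[str, str, str]
Model = Dict[Tuple[str, str], Counter]

def train_style_model(examples: List[Example]) -> Model:
    """Count shopkeeper responses separately for each (style, customer behavior) pair."""
    model: Model = defaultdict(Counter)
    for style, customer, response in examples:
        model[(style, customer)][response] += 1
    return model

def predict(model: Model, style: str, customer: str, fallback: str = "wait") -> str:
    """Return the response most often seen for this style and customer behavior."""
    counts = model.get((style, customer))  # .get does not insert into the defaultdict
    if not counts:
        return fallback
    return counts.most_common(1)[0][0]

# Invented toy data, not from the camera shop experiment.
examples = [
    ("passive", "customer enters", "wait"),
    ("passive", "customer enters", "wait"),
    ("passive", "customer asks price", "state price"),
    ("proactive", "customer enters", "greet and offer help"),
    ("proactive", "customer enters", "greet and offer help"),
    ("proactive", "customer enters", "wait"),
    ("proactive", "customer asks price", "state price and suggest accessory"),
]
model = train_style_model(examples)
print(predict(model, "passive", "customer enters"))    # wait
print(predict(model, "proactive", "customer enters"))  # greet and offer help
```

If the two styles produce clearly different predictions for the same customer behavior, the training data has captured something of each role-player's interaction style.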

Learning from human behavior is critical for developing autonomous robots for the real world

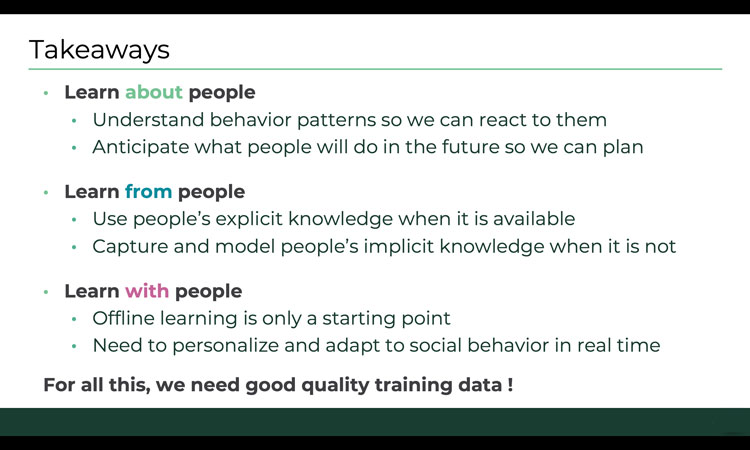

Glas and Liu wrapped up their presentation by highlighting some key takeaways from their research:

- Understand behavior patterns so we can react to them, and anticipate what people will do so we can plan ahead.

- Use people’s explicit knowledge when it is available and capture and model people’s implicit knowledge when it is not.

- Offline learning is only a starting point; robots also need to personalize and adapt to social behavior in real time.

What’s next?