Determining How Much Training Data You Need

Several factors determine how much machine learning training data you need. First and foremost is how important accuracy is. Say you’re creating a sentiment analysis algorithm. Your problem is complex, yes, but it’s not a life-or-death issue. A sentiment algorithm that achieves 85 or 90% accuracy is more than enough for most people’s needs, and a false positive or negative here or there isn’t going to substantively change much of anything. Now, a cancer detection model or a self-driving car algorithm? That’s a different story. A cancer detection model that could miss important indicators is literally a matter of life or death.

Of course, more complicated use cases generally require more data than less complex ones. As a rule of thumb, a computer vision model that only needs to identify foods will need less training data than one that’s trying to identify objects in general. The more classes you’re hoping your model can identify, the more examples it will need.

Note that there’s really no such thing as too much high-quality data. Better training data, and more of it, will improve your models. Of course, there is a point where the marginal gains of adding more data are too small to justify the cost, so you want to keep an eye on that and your data budget. You need to set the threshold for success, but know that with careful iterations, you can exceed that with more and better data.

Preparing Your Training Data

The reality is, most data is messy or incomplete. Take a picture, for example. To a machine, an image is just a series of pixels. Some might be green, some might be brown, but a machine doesn’t know this is a tree until it has a label associated with it that says, in essence, this collection of pixels right here is a tree. If a machine sees enough labeled images of a tree, it can start to understand that similar groupings of pixels in an unlabeled image also constitute a tree.

So how do you prepare training data so that it has the features and labels your model needs to succeed? The best way is with a human-in-the-loop. Or, more accurately, humans-in-the-loop. Ideally, you’ll leverage a diverse group of annotators (in some cases, you may need domain experts) who can label your data accurately and efficiently. Humans can also look at an output, say a model’s prediction about whether an image is in fact a dog, and verify or correct it (i.e., “yes, this is a dog” or “no, this is a cat”). This is known as ground truth monitoring and is part of the iterative human-in-the-loop process.

The more accurate your training data labels are, the better your model will perform. It can be helpful to find a data partner that can provide annotation tools and access to crowd workers for the often time-consuming data labeling process.

Testing and Evaluating Your Training Data

Typically, when you’re building a model, you split your labeled dataset into training and testing sets (though, sometimes, your testing set may be unlabeled). And, of course, you train your algorithm on the former and evaluate its performance on the latter. What happens when your test set doesn’t give you the results you’re looking for? You’ll need to update your weights, drop or add labels, try different approaches, and retrain your model.

When you do this, it’s incredibly important to keep your datasets split in the exact same way. Why is that? It’s the best way to evaluate success. You’ll be able to see which labels and decisions the model has improved on and where it’s falling flat. Different training sets can lead to markedly different outcomes on the same algorithm, so when you’re comparing different models, you need to use the same training data to truly know if you’re improving or not.

Your training data also usually won’t have equal amounts of every category you’re hoping to identify. To use a simple example: if your computer vision algorithm sees 10,000 instances of a dog and only 5 of a cat, chances are, it’s going to have trouble identifying cats. The important thing to keep in mind here is what success means for your model in the real world. If your classifier is just trying to identify dogs, then its low performance on cat identification is probably not a deal-breaker. But you’re going to want to evaluate model success on the labels you’ll need in production.

What happens if you don’t have enough information to reach your desired accuracy level? Chances are, you’ll need more training data. Models built on a few thousand rows are generally not robust enough to be successful for large-scale business practices.

Training Data FAQs

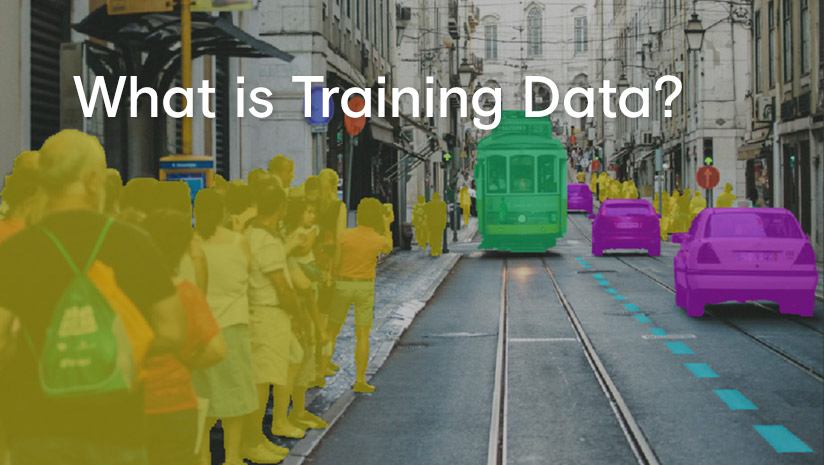

The following are several frequently asked questions about training data in machine learning:

What is training data?

- Neural networks and other artificial intelligence programs require an initial set of data, called a training dataset, to act as a baseline for further application and utilization. This dataset is the foundation for the program’s growing library of information. The training dataset must be accurately labeled before the model can process and learn from it.

How do I annotate my training dataset?

- There are several options available for annotating your training set. You can rely on internal members of your organization, hire contractors, or work with a third-party data provider that can provide access to a crowd of workers for labeling purposes. The method you choose will depend on the resources you have available and on your use case.

What is a test set?

- You need both training and testing data to build an ML algorithm. Once a model is trained on a training set, it’s usually evaluated on a test set. Oftentimes, these sets are taken from the same overall dataset, though the training set should be labeled or enriched to increase an algorithm’s confidence and accuracy.
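
As a rough illustration of why test-set evaluation should look at each label and not only at one overall number, the sketch below reuses the dog-and-cat imbalance from earlier. The function name `per_class_recall` and the always-"dog" predictions are assumptions made for the example, not part of any particular library.

```python
from collections import Counter

def per_class_recall(y_true, y_pred):
    """Return {label: fraction of that label's examples predicted correctly}."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    # Counter returns 0 for labels that were never predicted correctly.
    return {label: hits[label] / totals[label] for label in totals}

# Hypothetical test set: 10,000 dogs and 5 cats, scored against a
# stand-in "model" that always answers "dog".
y_true = ["dog"] * 10_000 + ["cat"] * 5
y_pred = ["dog"] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
recall = per_class_recall(y_true, y_pred)

print(f"overall accuracy: {accuracy:.4f}")
print(f"per-class recall: {recall}")
```

Overall accuracy looks excellent here even though not a single cat is identified, which is why the per-label view matters whenever the rare label is one you need in production.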

How should you split up a dataset into test and training sets?

- Generally, a dataset is split more or less randomly, while making sure the important classes you know up front are represented in both sets. For example, if you’re trying to create a model that can read receipt images from a variety of stores, you’ll want to avoid training your algorithm on images from a single franchise. This will make your model more robust and help prevent it from overfitting.
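
One way to get a split that is random but still covers every known class is a seeded, stratified split. The following is a minimal sketch using only the Python standard library; the helper `stratified_split`, the store names, and the 20% test fraction are all assumptions chosen for illustration. The fixed seed also means the split is identical on every run, which keeps comparisons between retrained models fair.

```python
import random
from collections import defaultdict

def stratified_split(rows, label_of, test_fraction=0.2, seed=42):
    """Split rows into (train, test), keeping each label present in both."""
    rng = random.Random(seed)            # fixed seed -> same split every run
    by_label = defaultdict(list)
    for row in rows:
        by_label[label_of(row)].append(row)

    train, test = [], []
    for label in sorted(by_label):       # sorted so the order is deterministic
        group = by_label[label]
        rng.shuffle(group)
        # Hold out at least one example of every label.
        n_test = max(1, round(len(group) * test_fraction))
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test

# Hypothetical receipt dataset: (image_id, store) pairs from three stores.
stores = ["acme"] * 50 + ["bolt"] * 30 + ["cora"] * 20
rows = list(enumerate(stores))

train, test = stratified_split(rows, label_of=lambda row: row[1])
print(len(train), len(test))
```

Note that a label with only one example would end up entirely in the test set under this sketch, so very rare classes need either more data or special handling.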

How do I make sure my training data isn’t biased?

- This is an important question as companies work toward making AI more ethical and effective for everyone. Bias can be introduced at many stages of the AI building process, so you should mitigate it at every step of the way. When you collect your training data, be sure your data is representative of all use cases and end users. You’ll want to ensure you have a diverse group of people labeling your data and monitoring model performance as well, to reduce the chance of bias at this stage. Finally, include bias as a measurable factor in your key performance indicators.
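
One simple way to make bias measurable, sketched here under stated assumptions, is to report accuracy separately for each group of end users and track the gap between the best and worst group. The function `accuracy_by_group`, the group names, and the evaluation records below are hypothetical; real projects often use more specialized fairness metrics.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, y_true, y_pred). Returns {group: accuracy}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical evaluation records; the group names are placeholders.
records = (
    [("group_a", 1, 1)] * 90 + [("group_a", 1, 0)] * 10
    + [("group_b", 1, 1)] * 70 + [("group_b", 1, 0)] * 30
)

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores, f"gap: {gap:.2f}")
```

A gap like this can sit alongside overall accuracy in a set of key performance indicators, so a model that improves on average while getting worse for one group is flagged.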

How much training data is enough?

- There’s really no hard-and-fast rule around how much data you need. Different use cases, after all, will require different amounts of data. Ones where you need your model to be incredibly confident (like self-driving cars) will require vast amounts of data, whereas a fairly narrow sentiment model that’s based on text requires far less. As a general rule of thumb, though, you’ll need more data than you expect.
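
In practice, one common way to judge whether more data is still paying off is to retrain the same model on growing subsets, score each run on one fixed test set, and look at the gain per added example. The sketch below only does the arithmetic on such measurements; the accuracy figures, the function names, and the threshold of 0.01 accuracy points per 1,000 examples are all made-up assumptions for illustration.

```python
def marginal_gains(curve):
    """curve: list of (n_examples, accuracy), sorted by n_examples.

    Returns a list of (n_examples, accuracy gain per 1,000 added examples),
    one entry for each step up in dataset size.
    """
    gains = []
    for (n0, a0), (n1, a1) in zip(curve, curve[1:]):
        gains.append((n1, (a1 - a0) / (n1 - n0) * 1000))
    return gains

def first_plateau(curve, min_gain_per_1k=0.01):
    """Return the first dataset size whose step gained less than the threshold."""
    for n, gain in marginal_gains(curve):
        if gain < min_gain_per_1k:
            return n
    return None  # every step was still worth it at this threshold

# Hypothetical measurements from retraining on growing subsets.
curve = [(1_000, 0.70), (2_000, 0.78), (4_000, 0.83), (8_000, 0.85), (16_000, 0.855)]

for n, gain in marginal_gains(curve):
    print(f"up to {n:>6} examples: {gain:+.4f} accuracy per 1,000 added")
print("gains drop below threshold at:", first_plateau(curve))
```

Where to set the threshold depends on what an extra labeled example costs and on how much a point of accuracy is worth for the use case.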

What is the difference between training data and big data?

- Big data and training data are not the same thing. Gartner calls big data “high-volume, high-velocity, and/or high-variety” and this information generally needs to be processed in some way for it to be truly useful. Training data, as mentioned above, is labeled data used to teach AI models or machine learning algorithms.