Sensor Fusion Shapes the Future of Connected Devices

Sensors are used in almost every industry now: they’re found in our cars, in our factories, and even in our smartphones. While an individual sensor may provide useful data on its own, imagine the information that could be extracted by combining the output of multiple sensors at once. Done well, this gives us a much better model of the world around us, one in which the whole is greater than the sum of its parts. Sensor fusion is the process through which we can accomplish this feat. Specifically, sensor fusion is the process of merging data from multiple sensors to create a more accurate conceptualization of the target scene or object. The idea behind it is that each individual sensor has both strengths and weaknesses; the goal is to leverage the strengths of each and reduce uncertainty to obtain a precise model of the environment being studied.

What are the Different Types of Sensors?

First, let’s define the various types of sensors used in a sensor fusion process. An autonomous car, for example, uses many different sensors to navigate its environment. Here are several of the most common sensor technologies:

Camera

A camera captures images of a scene, which can then be used to identify the objects within it. Its weakness is that the image can easily be degraded by darkness, poor weather, dirt, and so on.

Radar

A radar sensor emits radio waves to detect objects and precisely estimate their speeds. It relies on the Doppler effect: by measuring the shift in frequency of the waves reflected back to it, it determines an object’s radial velocity, that is, whether and how fast the object is moving toward or away from the sensor. Unlike a camera, however, it can’t readily indicate what kind of object it is sensing.

LiDAR

Standing for light detection and ranging, LiDAR uses pulses of laser light, typically in the infrared range, to measure the distance between the sensor and a target object. The sensor sends out pulses and measures the time it takes for each one to bounce off an object and return. This data is then used to create a 3D point cloud of the environment. The disadvantages of LiDAR are that its range is limited and it isn’t nearly as affordable as cameras or radar.

Ultrasonic Sensor

Ultrasonic sensors emit high-frequency sound waves and time their echoes to estimate the position of a target object within a few meters.

Odometric Sensor

By measuring wheel speeds, an odometric sensor can help estimate a vehicle’s position and orientation relative to a known starting point.

The Three Categories of Sensor Fusion

Generally speaking, there are three different approaches to, or types of, sensor fusion.

Complementary

This type of sensor fusion consists of sensors that don’t depend on one another but whose combined output creates a more complete image of a scene. For instance, several cameras placed around a room, each focused on a different part of it, can collectively provide a picture of what the whole room looks like. The advantage of this type is that it typically offers the greatest level of completeness.

Competitive/Redundant

When sensor fusion is set up in a competitive arrangement, sensors provide independent measurements of the same target object. In this category, there are two configurations: one is the fusion of data from independent sensors, and the other is the fusion of data from a single sensor taken at separate instants. Because the redundant measurements can be checked against and averaged with one another, this category gives the highest level of accuracy and reliability of the three types.

Cooperative

The third type of sensor fusion is called cooperative. It involves two independent sensors providing data that, when taken together, delivers information that wouldn’t be available from a single sensor. For instance, in the case of stereoscopic vision, two cameras at slightly different viewpoints provide data that can collectively form a 3D image of the target object. This is the most difficult of the three categories to use, as the results are especially sensitive to errors from the individual sensors. But its advantage is its ability to provide a unique model of a scene or target object.

Note that many uses of sensor fusion leverage more than one of the three types to create the most accurate result possible. There are also three communication schemes used in sensor fusion:

- Decentralized: No communication occurs between the sensor nodes.

- Centralized: Sensors send their data to a central node.

- Distributed: Sensor nodes exchange information at set intervals.
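The stereoscopic example from the cooperative category can be made concrete with the textbook pinhole-camera relation for a rectified stereo pair, Z = f·B/d. This is a minimal sketch that assumes two parallel, rectified cameras and an ideal pinhole model. The function name `stereo_depth` and the focal length and baseline values are hypothetical and chosen only for illustration. The second computation in the loop shows why cooperative fusion is so sensitive to errors in the individual sensors.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by both cameras of a rectified stereo pair.

    Ideal pinhole model (an assumption): Z = f * B / d, where f is the focal
    length in pixels, B is the distance between the two cameras in meters, and
    d is the disparity, i.e. how many pixels the point shifts horizontally
    between the two images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_PX = 700.0   # focal length in pixels
BASELINE_M = 0.12  # 12 cm between the two cameras

for d in (42.0, 4.2):
    z = stereo_depth(FOCAL_PX, BASELINE_M, d)
    # The same 1-pixel matching error shifts a distant point (small d) much more.
    z_err = stereo_depth(FOCAL_PX, BASELINE_M, d - 1.0)
    print(f"disparity {d:4.1f} px: depth {z:.2f} m, or {z_err:.2f} m with a 1 px error")
```

With these numbers, a one-pixel matching error moves the near point (2 m) by only about 5 cm. The same error moves the far point (20 m) by more than 6 m. Neither camera alone could recover depth at all, so errors from each sensor feed directly into the combined result.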

Algorithms in Sensor Fusion

To merge the data in sensor fusion applications, data scientists need to use an algorithm. Perhaps the most popular algorithm in sensor fusion is the Kalman filter. Before turning to it, though, note that there are three levels at which data scientists can fuse sensor data.

Three Levels of Sensor Fusion

Sensor output requires processing before it can be fused, and how much processing happens first depends on the level of fusion. The level chosen affects data storage needs as well as the accuracy of the model. Here’s a summary of the three levels:

- Low-Level: Low-level sensor fusion combines the raw output of the sensors, which ensures we’re not unintentionally introducing noise into the data during transformation. The disadvantage of this method is that it requires processing a large amount of data.

- Mid-Level: Instead of using raw data, mid-level sensor fusion uses data that’s already been interpreted, either by the individual sensor or by a separate processor. It weighs competing hypotheses about the object’s position to arrive at a single answer.

- High-Level: Like mid-level fusion, high-level sensor fusion weighs hypotheses to estimate the object’s position. However, it also applies this method to identify the object’s trajectory.
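The levels above do not prescribe how hypotheses are weighted. One common, simple choice is inverse-variance weighting, assumed here, where each sensor’s estimate counts in proportion to how certain it is. The same idea underlies combining redundant measurements in competitive fusion. The following is a minimal sketch under those assumptions: independent estimates with roughly Gaussian errors, along a single axis. The `Hypothesis` class, the `fuse` function, and the sensor readings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """One sensor's (already interpreted) estimate of an object's position on one axis."""
    sensor: str
    position_m: float
    variance_m2: float  # lower variance = more trusted estimate


def fuse(hypotheses: list[Hypothesis]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent position estimates.

    Returns (fused position, fused variance). Assumes the estimates are
    independent and their errors roughly Gaussian.
    """
    weights = [1.0 / h.variance_m2 for h in hypotheses]
    total = sum(weights)
    position = sum(w * h.position_m for w, h in zip(weights, hypotheses)) / total
    return position, 1.0 / total


# Hypothetical readings of the same object from three sensors.
readings = [
    Hypothesis("camera", 10.4, 0.50),
    Hypothesis("radar", 10.0, 0.10),
    Hypothesis("lidar", 10.1, 0.05),
]
pos, var = fuse(readings)
print(f"fused position {pos:.3f} m, variance {var:.4f} m^2")
```

The fused variance is always smaller than the smallest individual variance. That is the quantitative sense in which combining sensors reduces uncertainty. The least certain sensor (here the camera) still contributes, but only a little.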

The Kalman Filter

Invented as far back as 1960, the Kalman filter is found in our smartphones and satellites and is commonly used for navigation. Its purpose is to estimate the current state of a dynamic system (although it can also estimate past states, known as smoothing, and future states, known as prediction). It’s especially helpful when working with noisy data; for instance, sensors on autonomous vehicles sometimes capture incomplete or noisy data that can then be corrected by the Kalman algorithm.

The Kalman filter is a form of Bayesian filter. In Bayesian filtering, the algorithm alternates between two steps: the prediction (an estimate of the current state, projected forward from the previous estimate using a motion model) and the update (the observations from the sensors). Essentially, the algorithm takes the prediction and corrects it according to the update, repeating this cycle each time a new measurement arrives.

The Kalman filter makes predictions in real time using a mathematical model of the state (which includes position and speed) and its uncertainty. The standard filter assumes linear models, but some sensor output isn’t linear. Radar, for example, reports measurements in polar coordinates (range and bearing), which relate nonlinearly to a position-and-speed state expressed in Cartesian coordinates. In this case, data scientists rely on one of two extensions:

- Extended Kalman Filter: Uses Jacobians (a first-order Taylor series expansion) to linearize the model around the current estimate.

- Unscented Kalman Filter: Instead of linearizing, passes a small set of carefully chosen sample points (sigma points) through the nonlinear functions, which usually gives a more accurate approximation.
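The predict/update cycle described above can be sketched as a minimal linear Kalman filter. This sketch assumes a single axis, a constant-velocity motion model, and a sensor that reports position only. The class name `KalmanCV1D`, the process-noise intensity `q`, and the measurement variance `r` are illustrative assumptions, not tuned values. The 2×2 covariance is written out by hand to avoid external dependencies.

```python
import random
from dataclasses import dataclass


@dataclass
class KalmanCV1D:
    """Kalman filter tracking position and velocity along one axis.

    Assumes a constant-velocity motion model, a sensor that measures position
    only with noise variance `r`, and white-noise acceleration with intensity `q`.
    """
    p: float = 0.0          # position estimate (m)
    v: float = 0.0          # velocity estimate (m/s)
    var_p: float = 1000.0   # covariance entries; large values = very uncertain start
    cov_pv: float = 0.0
    var_v: float = 1000.0
    q: float = 0.1
    r: float = 1.0

    def predict(self, dt: float) -> None:
        """Prediction step: project the state and its uncertainty forward by dt."""
        self.p += self.v * dt  # constant velocity: x' = F x
        # P' = F P F^T + Q, expanded for F = [[1, dt], [0, 1]] and a
        # white-noise-acceleration Q. Each line uses only not-yet-updated terms.
        self.var_p += 2 * dt * self.cov_pv + dt**2 * self.var_v + self.q * dt**4 / 4
        self.cov_pv += dt * self.var_v + self.q * dt**3 / 2
        self.var_v += self.q * dt**2

    def update(self, z: float) -> None:
        """Update step: correct the prediction with a position measurement z."""
        y = z - self.p            # innovation: reading minus prediction
        s = self.var_p + self.r   # innovation variance
        k_p = self.var_p / s      # Kalman gain for position
        k_v = self.cov_pv / s     # Kalman gain for velocity
        self.p += k_p * y
        self.v += k_v * y
        # P = (I - K H) P with H = [1, 0]; var_v must use the old cov_pv.
        self.var_v -= k_v * self.cov_pv
        self.cov_pv *= 1 - k_p
        self.var_p *= 1 - k_p


# Simulated object moving at 2 m/s, observed by a noisy position sensor.
random.seed(0)
kf = KalmanCV1D()
dt, true_v = 0.1, 2.0
for step in range(1, 51):
    true_p = true_v * step * dt
    kf.predict(dt)                              # prediction step
    kf.update(true_p + random.gauss(0.0, 1.0))  # update with a noisy reading
print(f"estimated position {kf.p:.2f} m, velocity {kf.v:.2f} m/s")
```

The sensor never measures velocity directly. The filter infers it from how successive position readings disagree with its predictions. Because the measurement here is a linear function of the state, the plain filter suffices. A radar reporting range and bearing would need the EKF’s Jacobian or the UKF’s sigma points in the update step.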

Other Algorithms in Sensor Fusion

In addition to the Kalman filter, data scientists may choose to apply other algorithms to sensor fusion. They include:

- Neural Networks: In deep learning, neural networks can fuse image data from multiple sensors in order to classify results.

- Central Limit Theorem (CLT): CLT-based approaches average data from multiple sensors. By the CLT, the average of many independent readings tends toward a bell curve (normal distribution), with less spread than any single reading of similar quality.

- Bayesian Algorithms: We mentioned that the Kalman filter is one type of Bayesian filter, but there are others. Related approaches go further: for example, Dempster-Shafer theory, a generalization of Bayesian reasoning, uses measures of uncertainty and inference to mimic human reasoning.

Sensor Fusion and Autonomous Vehicles

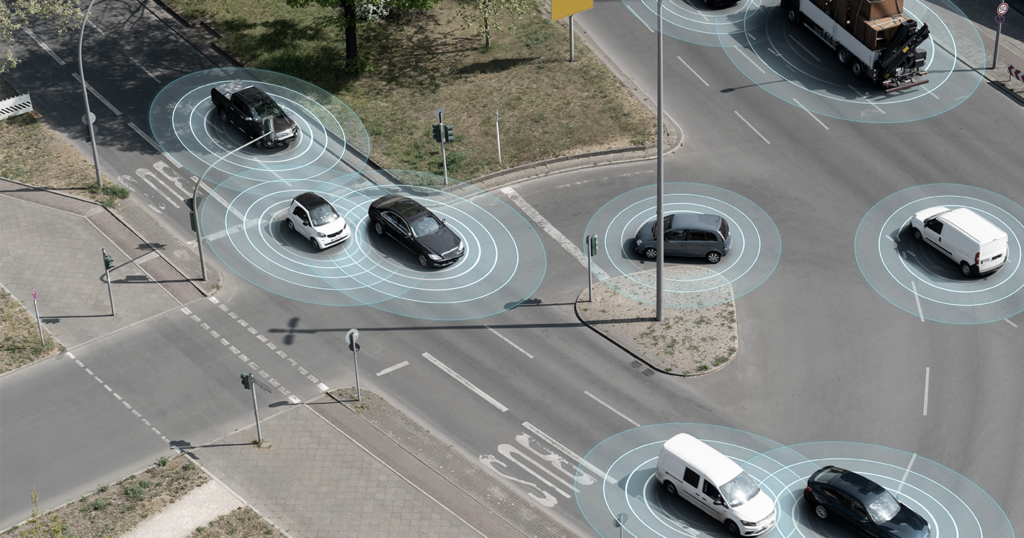

Sensor fusion is critically important to the field of autonomous vehicles. Fusion algorithms help vehicles navigate around obstacles by determining where those obstacles are, how fast they’re moving, and in what direction. In other words, sensor fusion is central to the safety of autonomous vehicles.

Let’s talk about how it works. A vehicle takes in input from cameras, radar, and LiDAR sensors to form a single model of its surrounding environment; sensor fusion is the process of merging this data into that single model. We described earlier how these different sensors work, as well as their strengths and weaknesses. Combined, their strengths can paint a highly accurate picture of a vehicle’s environment. This picture can then inform navigation decisions about where to go and how fast.

Sometimes, autonomous vehicles also leverage data pulled from inside the car, in what’s known as interior and exterior sensor fusion. The more sensor inputs the vehicle collects, the more complicated sensor fusion becomes. The tradeoff is that, if done correctly, the resulting model will generally be more accurate the more sensor data is used.

One example of the power of sensor fusion in automotive applications is preventing skids. Using a combination of inputs from the steering wheel’s orientation, a gyroscope, and an accelerometer, a sensor fusion algorithm can determine whether the car is skidding and in what direction the driver intends the vehicle to go. In this scenario, an autonomous vehicle could then automatically apply the brakes to prevent further skidding.

A Case Study: Appen’s Work with Autonomous Vehicles

Appen works with seven out of ten of the largest automotive manufacturers in the world, supplying them with high-quality training data. These complex, multi-modal projects must achieve as close to 100% accuracy as possible to ensure the vehicle can operate under any number of conditions.

“It isn’t enough for vehicles to perform well in simulated or good weather conditions in one type of topography. They must perform flawlessly in all weather conditions in every imaginable road scenario they will encounter in real world deployments. This means that teams working on the machine learning model for the vehicle’s AI must focus on getting training data with the highest possible accuracy before being able to deploy on the road.” – Wilson Pang, CTO, Appen

In Appen’s work on autonomous vehicle projects, we’ve recognized that there are considerable quality challenges when sourcing and aggregating data from multiple sensor types. Poor-quality training data, even if caught early, wastes considerable time as teams determine which components of the dataset need improvement. If caught later, it shows up as poor-performing models and a self-driving car that cannot complete testing with the needed levels of accuracy (then it’s back to the drawing board). As a result, Appen has extensive auditing tools to monitor annotated data and help auto manufacturers get as close to 100% accuracy as possible for the future safety of their customers.

As a specific example of sensor fusion, some auto manufacturers that Appen works with often need to merge two datasets with different dimensions. This is a difficult task, one that isn’t easily done without the right technology. To solve this, Appen’s platform can provide 3D point cloud annotation with object tracking at over 99% accuracy at the cuboid level. What this means is that our clients can link their 2D image annotations to the corresponding 3D point cloud annotations in order to map objects across both dimensions.
Our technology serves as an example of how leveraging tools and third-party expertise can help teams accomplish complex, multi-modal AI endeavors.